Chozofication

I mean, a PS6 is at least half a decade away. But if a game used more than 8 cores, the E cores would at least be used after the 8 big cores are busy. They're perfectly capable of helping in gaming; they just shouldn't be used until the main cores are saturated. As for when more than 8 cores will be needed... well, it's anyone's guess, but for the vast majority of games 6 cores are enough, and remember how long quad cores were enough? I think once more than 8 cores become beneficial for gaming, Intel will probably add more big cores, but not until then.

Say I'm gaming with 8 cores busy on the game, and I also want to multitask by streaming in 4K. Maybe the E cores can handle that, possibly with help from some dedicated ASIC, but I'm not sure.
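To make the "big cores first, E cores only once they're saturated" idea concrete, here's a toy placement policy. It's a sketch under loud assumptions: a hypothetical 8 P-core + 16 E-core part, one thread per core, no SMT, and `assign_threads` is an illustrative name. Real OS schedulers are far more involved (Intel Thread Director, for instance, feeds hardware hints to the OS scheduler).

```python
from typing import List


def assign_threads(num_threads: int, p_cores: int = 8, e_cores: int = 16) -> List[str]:
    """Toy placement policy (hypothetical, not how Windows or Linux actually work).

    Fill every P-core first, and only spill onto E-cores once all P-cores
    already have a thread. If there are more threads than cores, wrap around
    and start sharing cores in the same order.
    """
    # Slot order encodes the priority: P0..P(n-1) before E0..E(m-1).
    slots = [f"P{i}" for i in range(p_cores)] + [f"E{i}" for i in range(e_cores)]
    return [slots[t % len(slots)] for t in range(num_threads)]


if __name__ == "__main__":
    # A 10-thread game: 8 threads land on big cores, only 2 spill to E-cores.
    print(assign_threads(10))
```

With 6 or 8 threads nothing ever touches an E core; only the 9th thread onward does, which is the scenario described above.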

Also, who knows whether future games will use more cores? What if next-gen consoles come with 16 cores and most developers actually use them? What are the E cores gonna do then?

In addition, I personally want to try writing some high-thread-count code. Uniform cores work wonders there, because I can divide the work into equal chunks and have them all run in parallel and finish at about the same time. But what do I do with E cores? Either I give some threads smaller chunks of the workload, or I just ignore the E cores.
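The trade-off between those two options can be sketched with a little arithmetic. This assumes a hypothetical 8 P-core + 16 E-core layout and a made-up E-core speed of half a P-core; real ratios vary a lot by workload. It compares an equal split across all cores, ignoring the E cores, and a speed-weighted split where E cores get smaller chunks. A dynamic work queue (threads grabbing small chunks until the work runs out) is the other common fix, not shown here.

```python
from typing import List


def equal_split(total: int, n: int) -> List[int]:
    """Divide `total` work units into n nearly equal chunks."""
    base, extra = divmod(total, n)
    return [base + (1 if i < extra else 0) for i in range(n)]


def weighted_split(total: int, speeds: List[float]) -> List[int]:
    """Give each core a share proportional to its (assumed) relative speed,
    so faster cores get bigger chunks and everyone finishes at about the same time."""
    s = sum(speeds)
    chunks = [int(total * sp / s) for sp in speeds]
    # Hand out the units lost to rounding down, fastest cores first.
    leftover = total - sum(chunks)
    order = sorted(range(len(speeds)), key=lambda i: -speeds[i])
    for i in order[:leftover]:
        chunks[i] += 1
    return chunks


def makespan(chunks: List[int], speeds: List[float]) -> float:
    """Wall-clock time is set by the last core to finish its chunk."""
    return max(c / sp for c, sp in zip(chunks, speeds))


if __name__ == "__main__":
    speeds = [1.0] * 8 + [0.5] * 16  # hypothetical: E-core runs at half P-core speed
    total = 1600
    print(makespan(equal_split(total, 24), speeds))          # 134.0, E cores are the stragglers
    print(makespan(equal_split(total, 8), speeds[:8]))       # 200.0, E cores ignored
    print(makespan(weighted_split(total, speeds), speeds))   # 100.0, all finish together
```

Under these assumptions, uniform chunks leave the P cores idle while the E cores finish, and ignoring the E cores is slower still; sizing chunks by core speed is what lets the E cores actually pay off.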

Note that some researchers have gotten AI code running on CPUs competitively with GPUs, and that kind of code can likely eat threads for breakfast. AI is the future, and it's conceivable CPUs still have a role to play there.

CPU algorithm trains deep neural nets up to 15 times faster than top GPU trainers

Rice University computer scientists have demonstrated artificial intelligence (AI) software that runs on commodity processors and trains deep neural networks 15 times faster than platforms based on graphics processors. (techxplore.com)

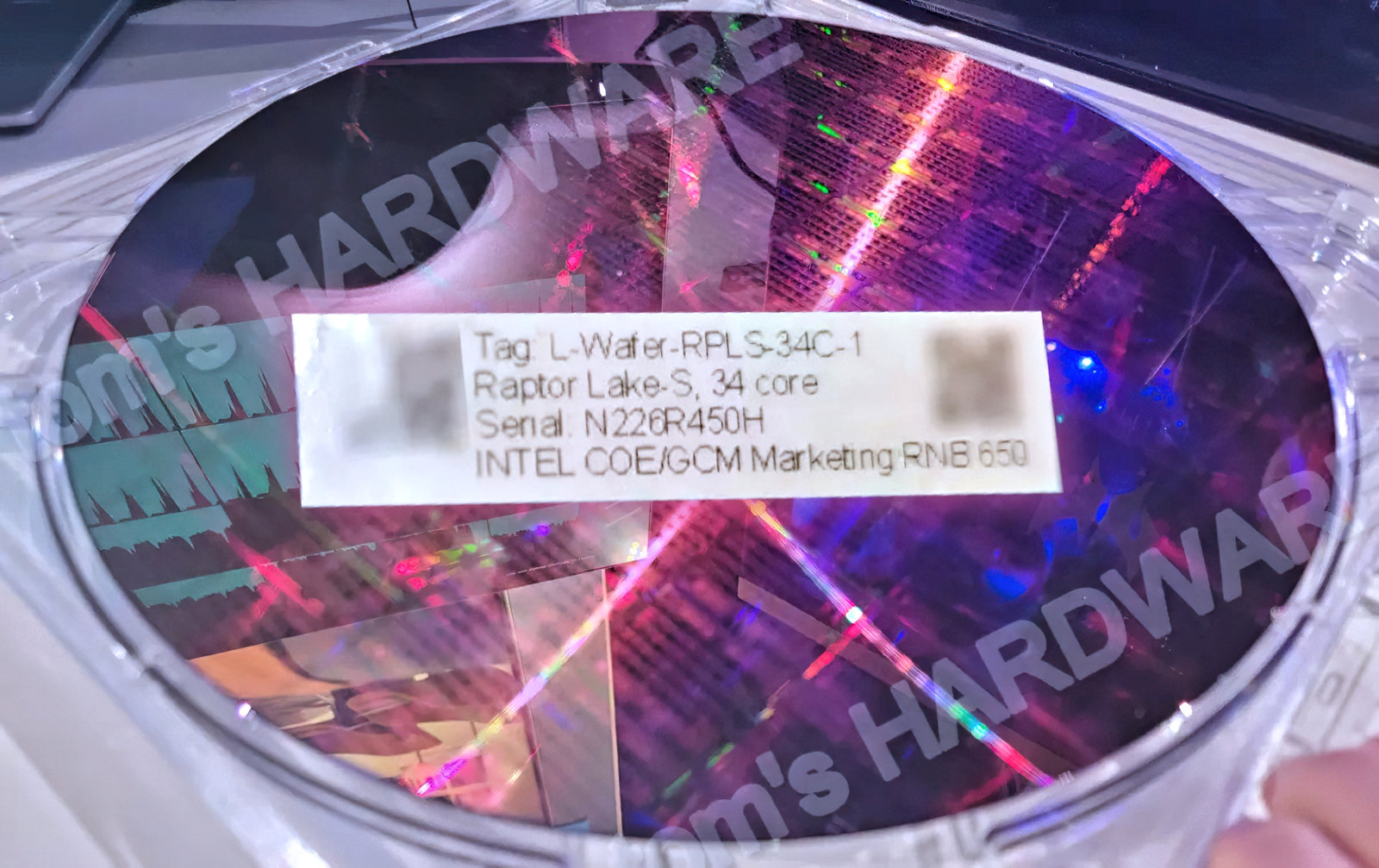

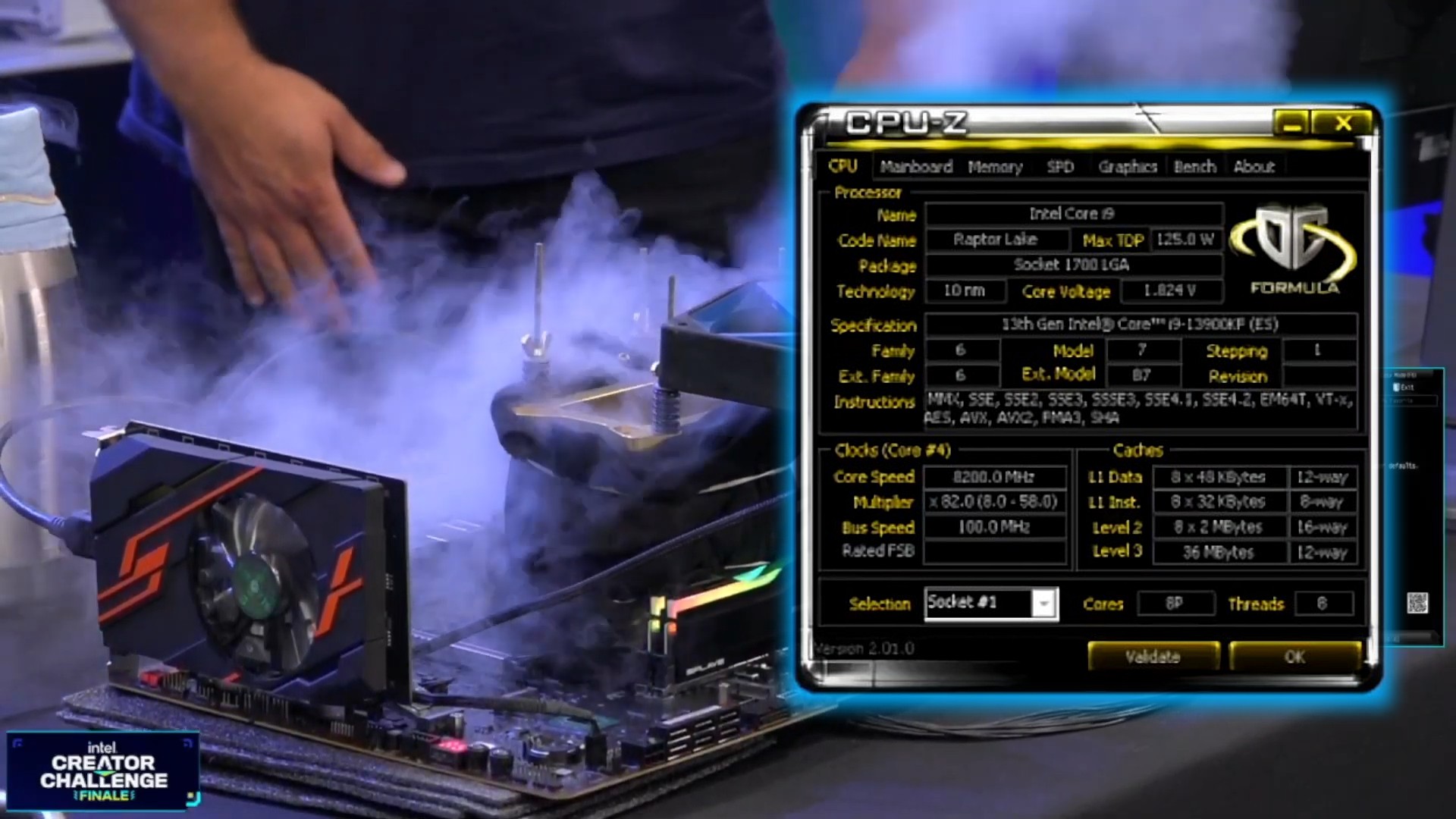

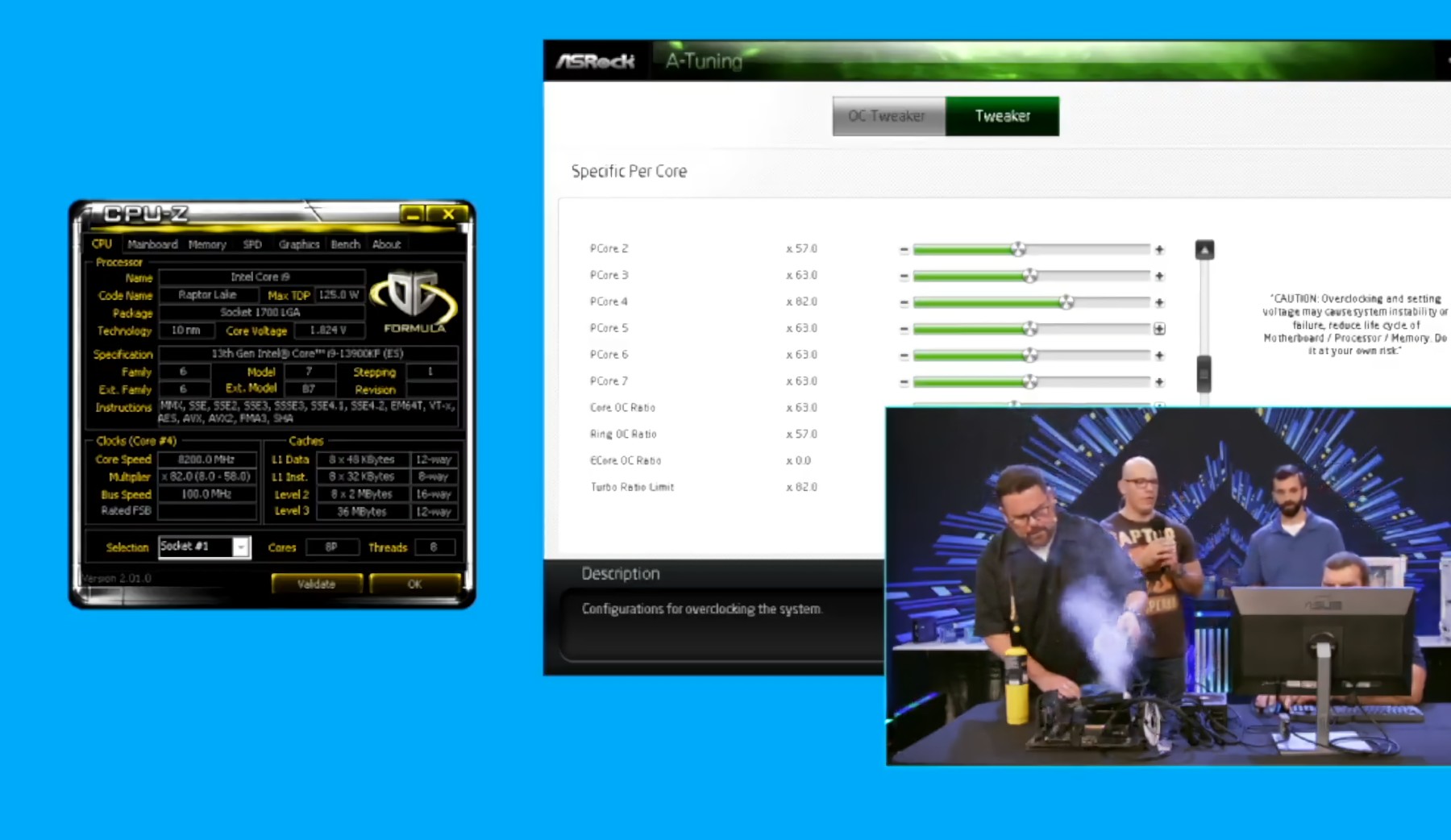

As for multicore/multitask workloads, I'd say wait and see how the 13900K compares with the 7950X. On the cheaper side of things, compare the i5-13600K with the 7600X: both have 6 big cores, but the i5 has 8 E cores on top.
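For reference, the core and thread counts behind these match-ups are simple to tally from the published specs. This sketch assumes 2 threads per big core (Hyper-Threading on Intel P-cores, SMT on Zen 4) and 1 thread per E core; the `Cpu` class is just an illustrative helper. Raw counts say nothing about per-thread speed, so they are only a starting point for the comparison.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Cpu:
    name: str
    big_cores: int  # full-size cores with 2-way SMT (Intel P-cores, all Zen 4 cores)
    e_cores: int    # Intel E-cores, 1 thread each

    @property
    def cores(self) -> int:
        return self.big_cores + self.e_cores

    @property
    def threads(self) -> int:
        return 2 * self.big_cores + self.e_cores


CPUS: List[Cpu] = [
    Cpu("i5-13600K", 6, 8),
    Cpu("Ryzen 5 7600X", 6, 0),
    Cpu("i9-13900K", 8, 16),
    Cpu("Ryzen 9 7950X", 16, 0),
]

if __name__ == "__main__":
    for cpu in CPUS:
        print(f"{cpu.name}: {cpu.cores} cores / {cpu.threads} threads")
```

So the i5-13600K has 14 cores / 20 threads against the 7600X's 6 cores / 12 threads, while the 13900K and 7950X both land at 32 threads (24 vs. 16 cores).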

So, y'know, on the lower-end SKUs Intel is just going to dominate for multitasking, and it'll be interesting to see the 13900K vs. the 7950X soon.