Just sounds unbelievable that they will completely leave the gaming market for 2 years.

Nvidia will only produce exactly as much as they have to, to fulfill the minimum quantities from the contracts with their suppliers.

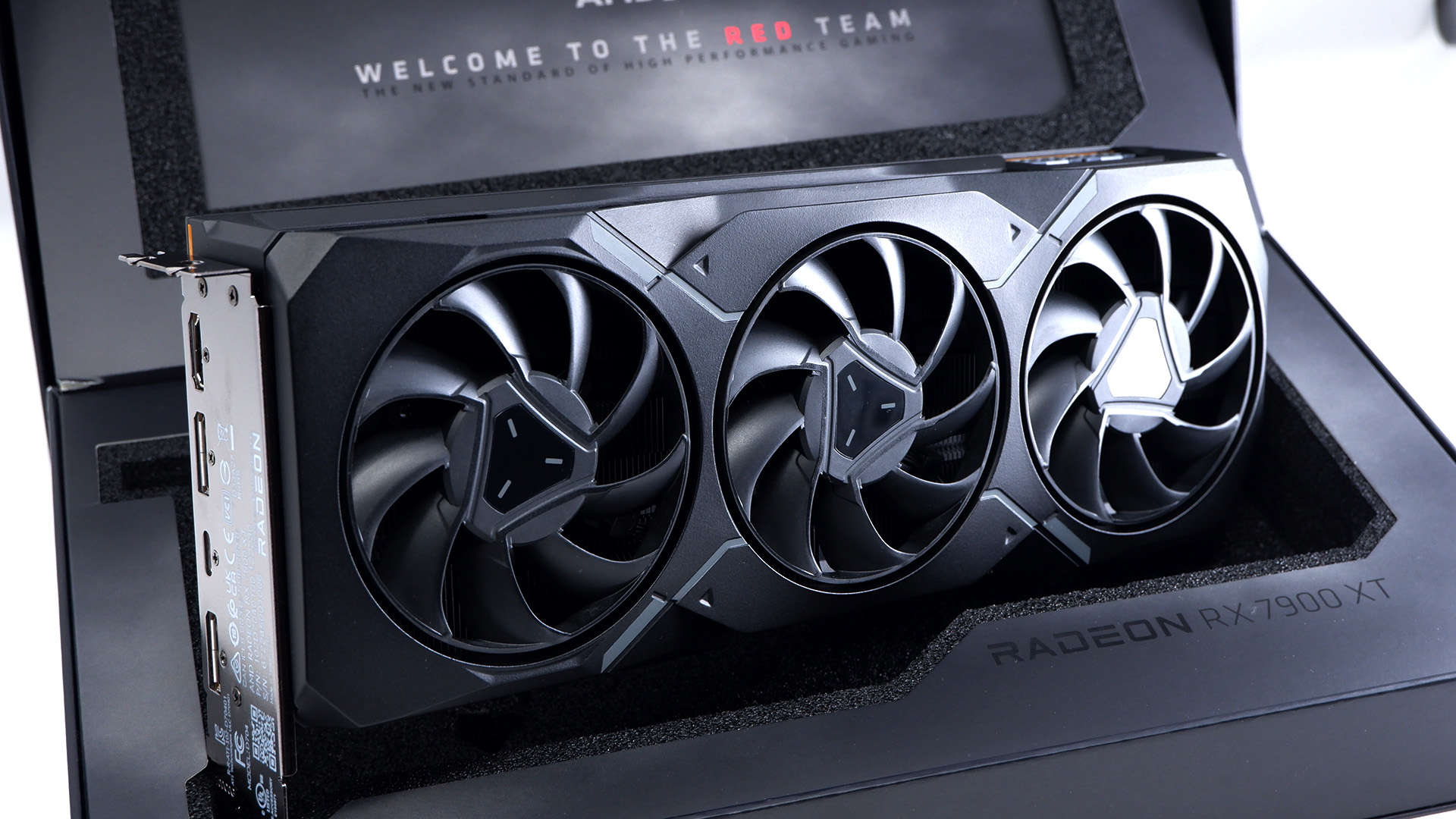

AMD is not interested in being #1 apparently. They do not think they can constantly keep up with the expectations of being #1. They prefer to ride the market out being a secondary choice.

Their mistake is that they want to charge as if they are #1. But the market quickly corrects their prices (unlike nVidia).

As for their high-end 8000 series / RDNA 4, they probably wanted the GCD to be built from chiplets and are having issues getting it working like they want. So they simply ditch it and hope to fix things for RDNA 5.

I'm pretty sure they want to be #1

They just can't. Whether it's lack of funding or not, I don't know for certain, but they lack better engineers.

If consoles didn't use AMD then AMD wouldn't exist today.

Say what you will about Nvidia, but until the crypto boom Nvidia really kept moving the needle.

Post crypto they just don't care anymore. AMD just sucks and everyone buys Nvidia even when Nvidia puts in less effort, and they are still better than AMD.

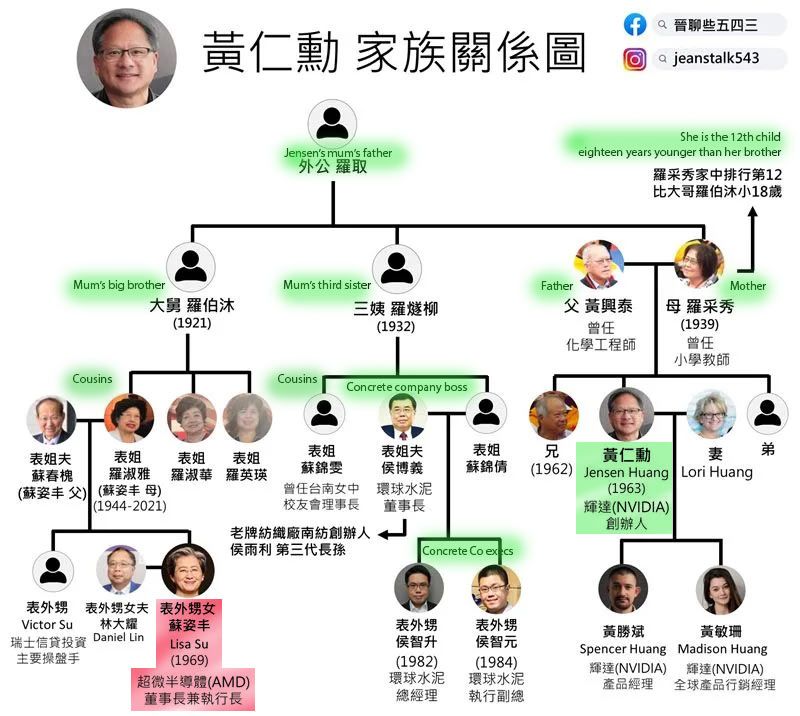

The fact that the CEOs of Nvidia and AMD are rumored to be blood related always makes me consider the possibility that they're acting as an oligopoly.

Consoles use AMD hardware because AMD is willing to deliver adequate performance within the budget constraints of the console space, and has the tech and production capacity for the needed APUs. Nvidia doesn't want to do it for the price, so it's good that we have AMD filling that space because, say what you want about the consoles, they're incredible for their comparatively low cost.

It's not just a case of cost. Nvidia soured their relationships with both Microsoft and Sony by being, unfortunately, a shitty partner to work with, as pretty much anyone who has worked with them can attest to. Partners normally drop them shortly afterwards: look at Apple, EVGA etc. (except Nintendo for some reason).

Outside of that, AMD definitely have a technology/IP advantage when it comes to APUs. Partly because consoles are such a large part of their graphics portfolio, they focus their designs around silicon area efficiency, low power envelopes etc. They do this from the initial design phase of their graphics IP rather than designing something for desktop and then trying to scale it down afterwards.

Of course their APUs are also used in laptops, the Steam Deck and other handhelds, embedded into cars etc.

I don't disagree with the rest of your post though, you are spot on there.

AMD has been growing a lot the last 7 years or so and they are much better funded and have way more staff than before. However they are still much smaller than Nvidia or Intel and Radeon group is a smaller subset of AMD again.

In addition to that they have a lot of tech debt from their near bankrupt years that they need to catch up on and ramping up new teams and new staff in something as complex as silicon/graphics design/validation is incredibly difficult. It often takes more than a year for someone to be trained up and to start properly contributing.

One of the ways that AMD competes with much larger companies like Intel or Nvidia is that they are always on the bleeding edge of silicon/packaging technology. For example jumping to advanced nodes before Nvidia with TSMC, they were even doing this long before RDNA was a thing, sometimes it bit them in the ass in the past.

However this is the main reason they are able to compete with these larger companies. They are the world leaders in MCM chip/silicon design and disaggregation as Zen has easily shown. On top of that their new MI300 is absolutely insane from a design and packaging perspective, the fact that they are able to release something so mental is a testament to that. I think @Ascend is right that in gamer land they often don't get the praise they deserve for always being on the bleeding edge technologically when it comes to their silicon designs.

For RDNA3 they tried to do something crazy and unprecedented in consumer graphics. Datacenter stuff is easier because it is intended for compute so you can create multiple dies and connect them together more easily because they don't have to handle graphics.

Unfortunately they tried to do too much for RDNA3 by both doing a massive redesign of the underlying architecture/CU etc. while also disaggregating the GPU components (MCM approach) via their advanced packaging know-how.

Long story short, a bug that they missed fucked up RDNA3. It was supposed to have 15-20% better performance across the board while drawing less power than it ended up drawing. They realised way too late to fix it before release; the kind of fix it needed would require a full re-tape-out, which would cost money, resources and manpower and would take 6-8 months to complete.

They decided to cut their losses and reassign their valuable validation engineers to the next generation (RDNA4). So we ended up getting a somewhat gimped RDNA3 compared to what it was intended to be.

Remember that had their plans worked out N31 would be nipping at the heels of 4090 for much cheaper with a smaller die and they wouldn't have had to drop the rest of the lineup down a tier.

Moving on to RDNA4, the current rumours don't look good for Radeon group right now. Again they were trying to do something insane for their high end RDNA4 dies, some crazy MCM type stuff with multiple Shader Engine Dies (SED) tiles. Kind of like some of the madness they have done with MI300.

Supposedly (take all these rumours with a grain of salt) getting the top dies working properly would take much longer than they initially forecast, due to the novel disaggregated nature of them in consumer graphics.

I've heard different reasons why this is. Some people say it was a skill issue and the task was just too difficult for them to handle; others say they don't have a problem doing it but the roadmap has a super aggressive time-to-market window that the top brass don't want to miss. Either way the supposed idea is that the time it would take them to do it right would push the RDNA4 release so far back that it would end up being too close to RDNA5.

So they decided to cancel N41 and N42 (the two disaggregated dies that had multiple SED tiles) and will instead release only the N43 and N44 midrange parts.

Supposedly they have deferred their multi SED die type frankenstein type designs for high end RDNA5. I don't know if that is just cope from AMD fanboy types or not but we will have to wait and see. I don't care about jumping on these hype trains for something that won't release until 2026.

I like AMD pushing the silicon and how they are willing to take risks. From an innovation and risk perspective, I don't think they do more than Nvidia though. I'd say they are more or less on the same innovation level hardware wise, with Nvidia going all in on AI and raytracing with dedicated hardware on their GPUs, which was a bold move. Their cards are always comparable in raster performance and trade blows, with AMD even being stronger in many cases. In the other aspects like image reconstruction and RT, they're a generation behind, unfortunately.

The 4060 ti 16gb is such a shitty card it's unbelievable. Nvidia fucked up on the lower end this gen, hard.

So I've been looking for GPUs, and I think I'm gonna sell my kidney for an MSI GeForce RTX 4070 VENTUS 3X OC so Jensen can buy a new leather jacket.

God damn I hope it is worth it.

My machine just randomly crashes with my 2070s, so I just hope the money spent on the GPU was better spent than on a psychiatrist in case my machine still crashes.

I think you'll like it. Frame gen is a legit feature of the 40 series.

Uhm yeah. The raytracing support for AMD cards in Ratchet & Clank just arrived.

You'd think that, with the game being developed for AMD hardware initially and no crazy expensive RTGI or path tracing going on, AMD GPUs would perform well here.

Maxed 4k even with FSR2 Quality mode, a 7900XTX struggles hard. Fluctuating between 25 and 80+ fps. You can't lock the game at 30fps.

Like most Sony 1st party games that get ported to PC, isn't R&C Nvidia sponsored? Even though these games were originally designed for AMD consoles the PC branch of the code is generally modified to be optimized for Nvidia GPUs in these cases.

Sony doesn't use DirectX and therefore doesn't use DXR; they have their own API. So it probably makes sense that replacing the entire RT implementation with DXR would result in different performance?

Of course the RT implementation could just be shit? I have no idea but I figured it was worth mentioning.

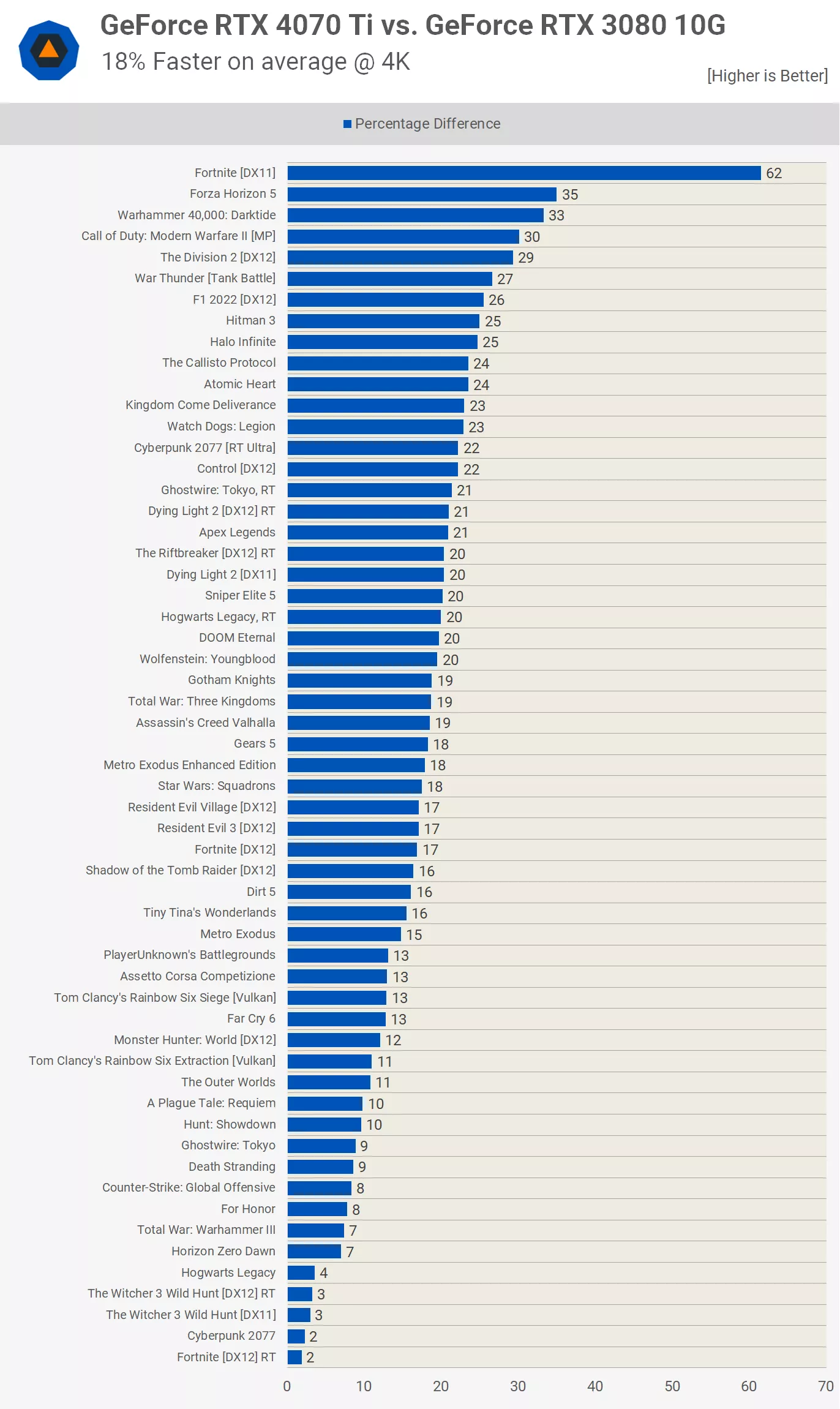

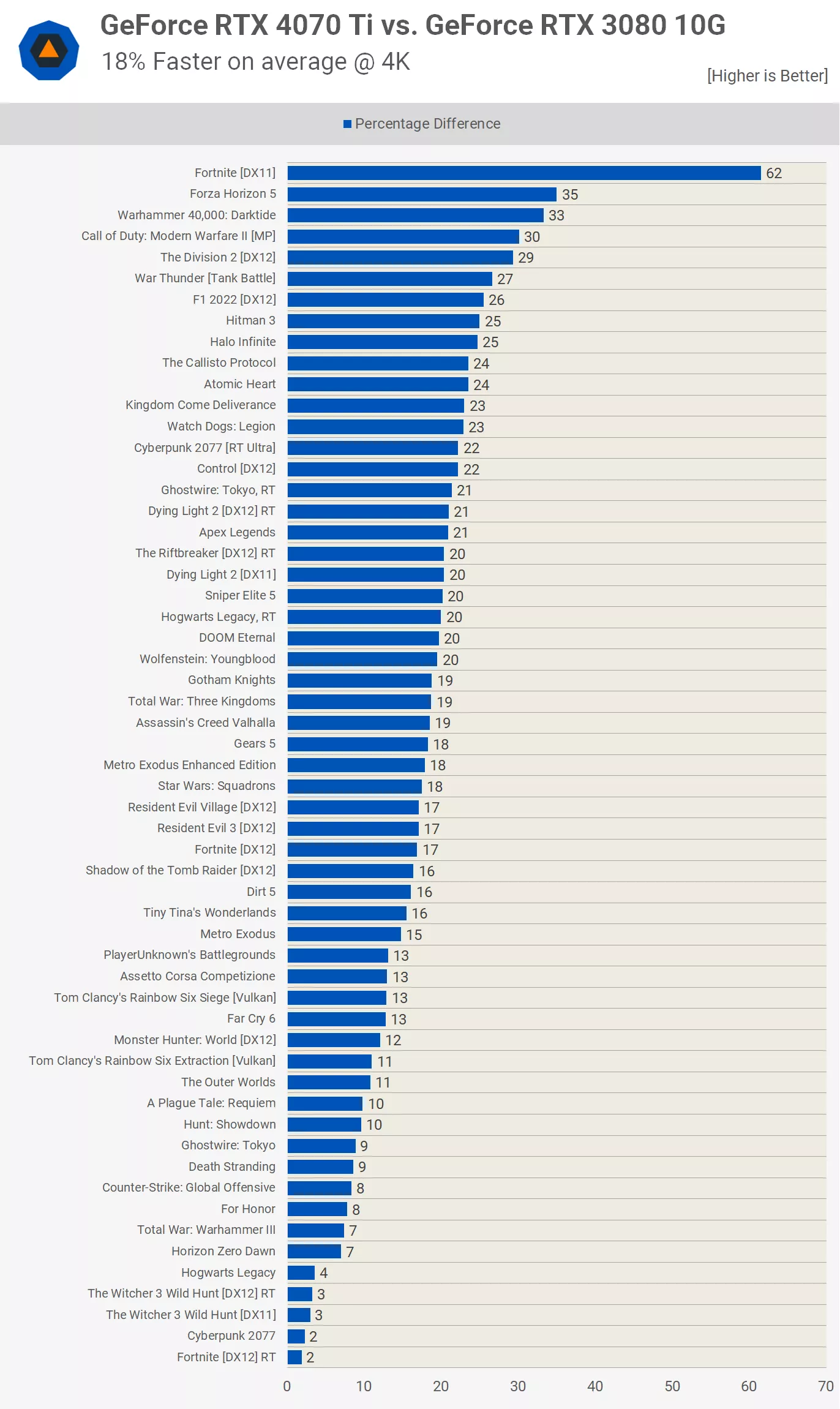

I just upgraded my monitor to a 4K/144Hz one and my 3080/10 GB is really struggling. That 10 GB really sucks now.

I have a feeling that the 4070 Ti with 12 GB would still be too little to justify the upgrade. Do you guys agree?

Can't justify a 4080/4090 at their price points at the moment...

Can't you just cap framerate in amd software like with nvidia?

The halt in 40xx cards seems to be real and already kicking in.

All 4070s are out of stock in my country and have to be ordered.

The earliest I can expect to see my MSI 4070 is August 17.

The struggle is real.

I hope he's wrong about it, but JayzTwoCents brought up something to keep an eye on: another potential GPU shortage because of the rising interest in AI.

Where? I took another brief look over in Europe and stock seems plentiful. The Ventus OC you were eyeing in Germany, for example:

MSI GeForce RTX 4070 Ventus 3X 12G OC from €776.63 (2024) | Price comparison, Geizhals Deutschland (geizhals.de)

It's up on Amazon for the UK.

It's not Nvidia sponsored from what I know. They obviously use it for their marketing because it has DLSS and stuff, but there's no cooperation or tech support from what I've seen. It's three RT effects at the same time, and the more complex the RT gets, the more we see AMD cards struggle, no matter the game. When it's only reflections and maybe shadows, AMD cards hold up ok. Add RTAO or RTGI and they can't deal with it well anymore; it's just their cards not being great for RT.

The 4070 ti is a perfect 1440p card. At 4k, it's still 18% faster on average than a 3080, so not that much. But it depends on the game. DLSS3 can make it worth it though.

I live in Denmark, where it seems to be in a remote stock somewhere (I don't know if that's the correct word in English).

Yeah. Checked a few places out of curiosity to see what the situation's like there, the more affordable models seem to be in stock at suppliers/warehouses with delivery times between 1-8 days while more expensive models are available in store for immediate pickup/delivery.

Where did you make this chart?

www.techspot.com

I was under the impression that Sony had some kind of deal with Nvidia for GeForce Now which meant that most of their PC releases were Nvidia sponsored (unless specifically sponsored individually by AMD/Intel like TLOU).

Moore's Law Is Dead has become a very reliable leaker tbh. And this vid has awesome info.

It's really just a 4050 ti, which would be great if it were called that and cost $300 or less, then $250 or less for the 8gb model. 4070 should be a 4060 for $400, 4080 a 4070 for $600 and some new card for the 4080 in between 4080 and 4090.

The 4060 ti being branded as such and sold for that price is really a shame, as the 1050 ti was a legendary card for the money: $130-140 for the 4gb model.

@Ascend I regularly skim through MLID videos for news on Intel.

I hear there are rumors of Super revisions on the horizon with far better specs for a similar price.